In 2018, Niantic unveiled its in-development augmented reality cloud platform for smartphones, the Niantic Real World Platform. A demo showed Pikachu and Eevee cavorting in a courtyard, darting in front of and behind potted plants and people's legs as they walked through the scene. This would be the future of Pokémon GO.

That proof of concept is now about to arrive in the game itself with a new feature called Reality Blending, which will roll out to Samsung Galaxy S9 and S10 and Google Pixel 3 and Pixel 4 handsets in early June, with additional devices joining the fun later.

Reality Blending brings occlusion to the game: the ability of the app to recognize real-world objects and display AR content in front of or behind them. Without occlusion, Pokémon float unrealistically on top of objects in the camera view.
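To make the idea concrete, here is a minimal sketch of occlusion as a per-pixel depth test. It is an illustration only, not Niantic's implementation: the function name `composite_with_occlusion`, the toy one-row "image," and the assumption that a depth estimate for the real scene is already available are all hypothetical.

```python
def composite_with_occlusion(camera_rgb, scene_depth, sprite_rgb, sprite_depth):
    """Per-pixel depth test: draw a virtual pixel only where it is nearer
    to the camera than the real scene at that pixel.

    camera_rgb / sprite_rgb: 2D lists of pixel values (None = transparent sprite pixel).
    scene_depth / sprite_depth: 2D lists of distances from the camera, in metres.
    All four inputs are hypothetical stand-ins for real camera and depth data.
    """
    out = []
    for y, row in enumerate(camera_rgb):
        out_row = []
        for x, real_pixel in enumerate(row):
            virtual_pixel = sprite_rgb[y][x]
            visible = (
                virtual_pixel is not None
                and sprite_depth[y][x] < scene_depth[y][x]
            )
            out_row.append(virtual_pixel if visible else real_pixel)
        out.append(out_row)
    return out


# Toy 1x4 frame: a potted plant ("P") 1 m away covers the two middle pixels;
# the wall ("W") behind it is 5 m away.
camera = [["W", "P", "P", "W"]]
scene_depth = [[5.0, 1.0, 1.0, 5.0]]

# A virtual character ("K") stands 2 m away across the first three pixels.
sprite = [["K", "K", "K", None]]
sprite_depth = [[2.0, 2.0, 2.0, 2.0]]

# With occlusion: the plant hides the part of the character behind it.
blended = composite_with_occlusion(camera, scene_depth, sprite, sprite_depth)

# Without scene depth (everything treated as infinitely far away),
# the character is simply pasted on top of the plant.
naive = composite_with_occlusion(camera, [[float("inf")] * 4], sprite, sprite_depth)
```

In this toy frame, `blended` keeps the plant pixels in front of the character, while `naive` draws the character over them, which is the "floating on top" effect described above. The hard part in practice is obtaining an accurate `scene_depth` from an ordinary camera.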

"Pokémon will be able to hide behind a real object or be occluded by a tree or table blocking its path, just like a Pokémon would appear in the physical world," said Kjell Bronder, senior product manager for AR at Niantic, in a statement.

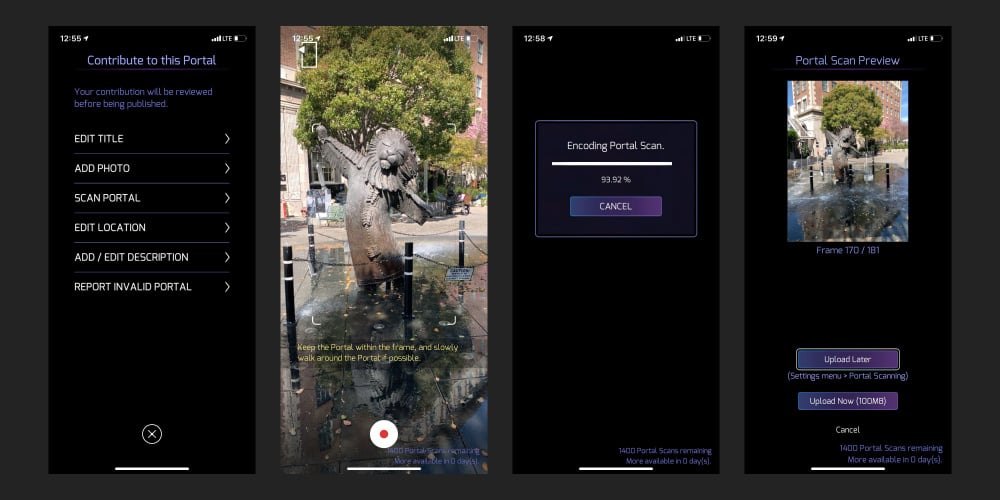

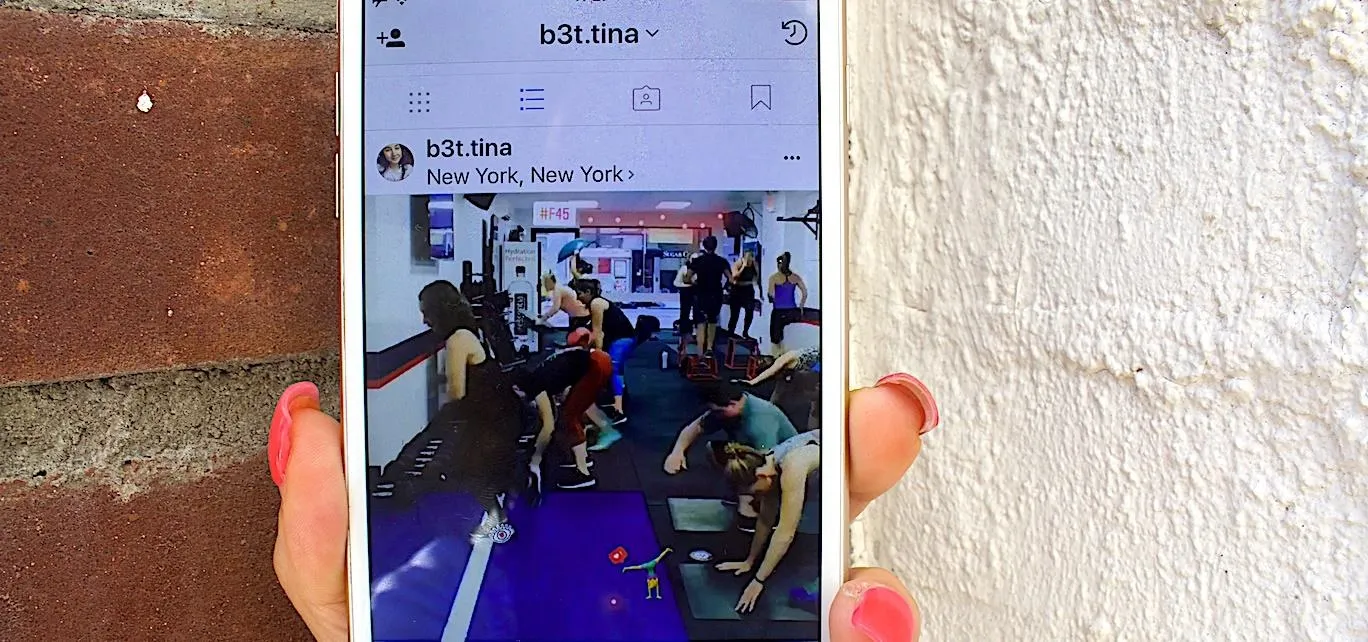

Also in June, Niantic will debut Pokéstop Scanning, an opt-in feature that asks players to help Niantic build the 3D maps that are the foundation of the Niantic Real World Platform.

Niantic deployed this component of the Real World Platform in Ingress earlier this year. There, the feature is called Portal Scanning, and it tasks players with pointing their smartphone cameras at landmarks, walking around them, and then uploading the data to Niantic.

Of course, Portals and Pokéstops are based on the same landmarks, so the data from players of both games will contribute to Real World 3D maps.

"This will allow us in the future to tie virtual objects to real-world locations and provide Pokémon with spatial and contextual awareness of their surroundings. For instance, this awareness will help Snorlax find that perfect patch of grass to nap on or give Clefairy a tree to hide behind," said Bronder.

The feature will roll out first to level 40 trainers. However, based on recent rollouts, the space between level tiers could be just a matter of hours. For example, in the rollout of Buddy Adventures, a tweet announcing the availability of the feature for level 40 trainers was followed by a level 30 tweet less than 30 minutes later.

The Niantic Real World Platform delivers what has become known as AR cloud technology for smartphones and, eventually, AR wearables. The platform supplies multiplayer capabilities, persistent content, and occlusion through a standard smartphone camera, whereas devices like HoloLens 2 and Magic Leap 1 rely on depth sensors for these capabilities.

The company is integrating the technology into its own games first before making it available as a service to other app developers. Late last year, the first of the AR cloud features arrived with Shared Experiences, which enabled multiplayer interactions with Pokémon in AR. The platform also powers Adventure Sync, which provides background step tracking for Pokémon GO and Harry Potter: Wizards Unite.

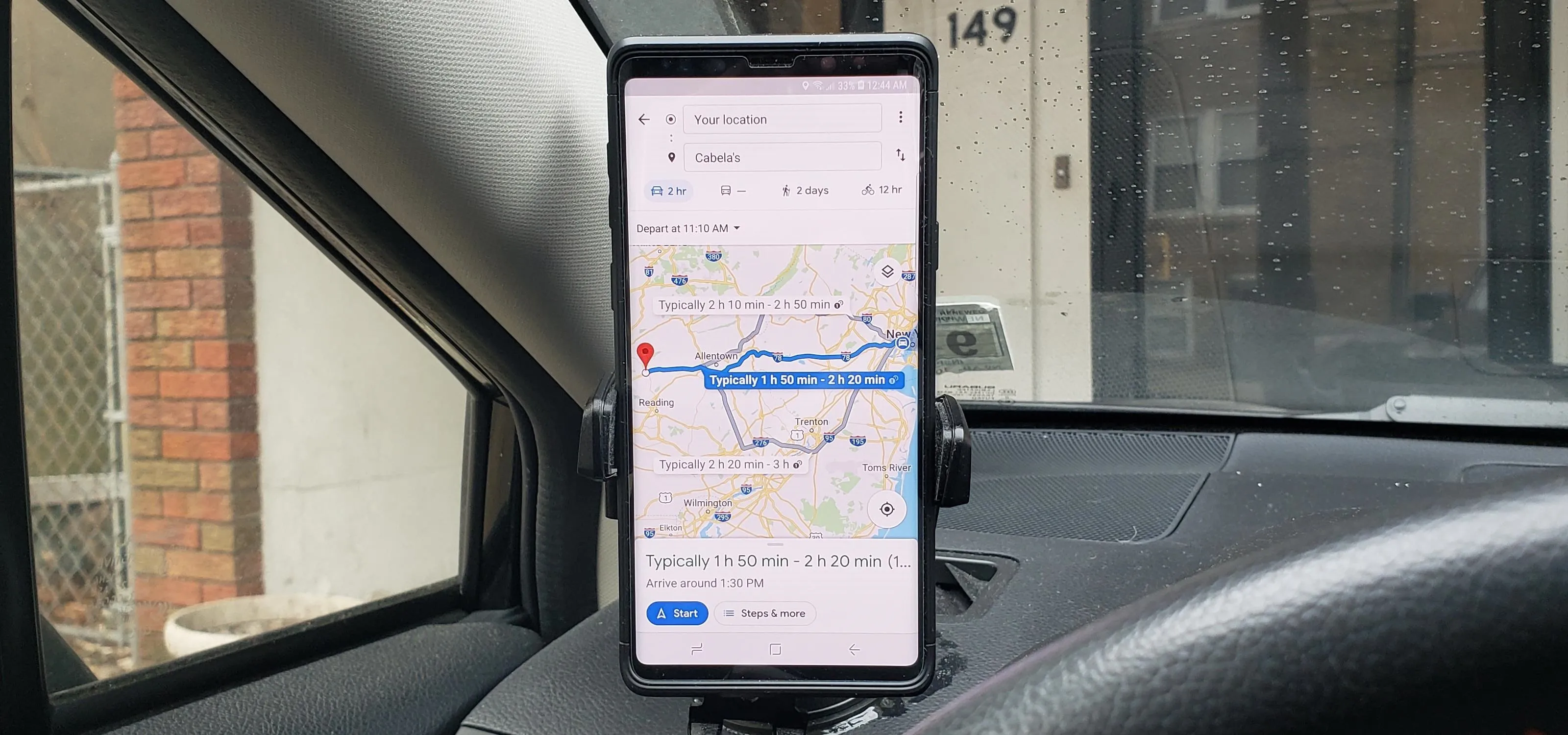

However, at least with regard to occlusion, some skepticism at this stage is warranted. Case in point, Google also debuted occlusion for AR content with the Depth API for its ARCore platform. Unfortunately, the results in the wild have not been as promising so far, as the blending is not as precise. For its part, Apple has only brought occlusion to its latest iPad Pro models outfitted with LiDAR for depth mapping. Even in this announcement, Niantic tempers expectations.

Sometimes Google's Depth API occludes really well.

Other times, it's a little off the mark.

"While there are still a lot of challenges to tackle on the way to making AR seem more realistic, these additions will help continue to create fun and exciting ways for people to interact with the world," said Bronder.

Last week, Niantic shared its progress on these challenges by publishing some of its computer vision research. The paper details the company's methodology for teaching computers to understand 3D space the way humans do.

While you can't see the ground behind the tree, you know it's there. You recognize the ground. You innately understand that a tree is circular. While we take this for granted, you're doing a very complicated processing task, translating a 2D image to a 3D space. While this ability is intuitive to humans, it's completely foreign to a computer. It's not good at understanding what 'ground' or 'tree' is, let alone guessing 3D space from a single 2D image.

To accomplish this, Firman and his team created a machine learning model that turns 2D images from a standard RGB camera into a 3D map by predicting the geometry of visible and hidden surfaces.

The team will present their findings at the Computer Vision and Pattern Recognition (CVPR) conference in June. In other words, June is a really big month for Niantic and the evolution of AR.

Cover image by Tommy Palladino/Gadget Hacks
